Weaviate Verba RAG with Node-Red & Ollama Engine

I've been absent a while, with many other things to focus on; however, this recent little nugget needed to be documented & shared, mainly because I did it on my personal laptop & need to recreate it somewhere else, and writing it up makes that easier. It might help someone else out too.

Right, so what am I talking about?

About a year ago I was doing some new stuff with LLMs and RAG (ingesting your own documents as the data to answer from, rather than relying on the LLM's training data), and it was okay-ish; it did the job. Zoom forward a year and, obviously, things have moved on quite a bit.

The RAG tools & code have improved significantly, but ingestion still takes time and I haven't found a way to speed that part up. Then again, I'm focused on offline/air-gapped/on-premise solutions; it could probably be faster using a Cloud SaaS offering, but that's of no interest to me, so I'll accept the time it takes.

What are the steps involved?

Get a bunch of documents and upload them to be chunked into 500-token chunks, then store those chunks in a Vectorstore (a flashy database of numbers). When a user asks a question (or writes a prompt to get an answer), the Vectorstore can then be queried for the chunks most relevant to it, so that the response is sensible, meaningful & appears to be intelligent.
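To make "quizzing the Vectorstore" a bit more concrete: each chunk is stored as a vector of numbers (an embedding), the question is turned into a vector by the same embedding model, and retrieval ranks chunks by how similar their vectors are to the question's. Below is a minimal sketch using brute-force cosine similarity over made-up 3-number vectors; the vectors and texts are purely illustrative, and Weaviate itself uses real embeddings with an approximate nearest-neighbour index rather than a loop like this.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 = pointing the same way, 0.0 = unrelated (or a zero vector)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force search: score every chunk against the query, return the k best."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "vectorstore": chunk text -> made-up 3-number embedding
# (real embeddings have hundreds of dimensions and come from an embedding model).
store = {
    "Ollama serves local LLMs over HTTP.": [0.9, 0.1, 0.0],
    "Node-Red wires flows together.": [0.1, 0.9, 0.1],
    "Weaviate stores vectors.": [0.7, 0.2, 0.3],
}

for text, score in top_k([1.0, 0.0, 0.1], store):
    print(f"{score:.3f}  {text}")
```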

Although, as I say, the chunking and ingesting of the data does take some time, the querying is SUPER FAST. I mean FAST FAST: sub-second, if not seconds. I need to test this on 10,000 documents, but for now I've done the "coder" thing and just used 3 documents as a tester.

Once I get the relevant document context snippets back, I bundle them together into a specially written prompt that I pass to the Ollama LLM Engine so the "AI" can generate an intelligent answer. What follows runs on quite an old (3 years?) laptop with a limited spec; a Raspberry Pi 5 is probably more powerful, and that's what I'm going to port this over to as a test later.
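The "bundle it into a prompt" step is basically string assembly. A minimal sketch, where `build_prompt`, the template wording and the `max_chars` budget are all hypothetical (this is not Verba's actual prompt):

```python
from typing import List


def build_prompt(question: str, snippets: List[str], max_chars: int = 4000) -> str:
    """Join retrieved context snippets and the user's question into a single prompt.

    max_chars is a hypothetical budget so a small model like tinyllama isn't
    handed more context than it can usefully read.
    """
    context_parts = []
    used = 0
    for i, snippet in enumerate(snippets, start=1):
        block = f"[{i}] {snippet.strip()}"
        if used + len(block) > max_chars:
            break  # stop adding snippets once the budget would be exceeded
        context_parts.append(block)
        used += len(block)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Numbering the snippets makes it easier to ask the model which one it relied on, and the character budget is a crude stand-in for a proper token count.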

Step 1: well, I'd just upgraded the laptop to Ubuntu 24 (and it all still works, shock! horror!), which bumped the Python version to 3.12, so technically I was installing fresh into this environment.

I did hit the "externally-managed-environment" error when running the "pip" command. I didn't bother trying to fix it properly, so I just opted for a naughty fix (renaming the marker file needs root, hence the sudo):

$ cd /usr/lib/python3.12/

$ sudo mv EXTERNALLY-MANAGED EXTERNALLY-MANAGED.old

Yes, not the best - but it works :-)
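For reference, PEP 668 puts the `EXTERNALLY-MANAGED` marker in the interpreter's standard-library directory, which `sysconfig.get_path("stdlib")` reports, so a small sketch like this shows where the file is and whether it's still there. The less naughty alternative is a virtual environment (`python3 -m venv`), because pip ignores the marker inside one:

```python
import sys
import sysconfig
from pathlib import Path


def externally_managed_marker() -> Path:
    """Where PEP 668 expects the EXTERNALLY-MANAGED marker for this interpreter."""
    return Path(sysconfig.get_path("stdlib")) / "EXTERNALLY-MANAGED"


def in_virtualenv() -> bool:
    """Inside a venv, sys.prefix differs from sys.base_prefix and pip ignores the marker."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    marker = externally_managed_marker()
    print(f"marker: {marker} (exists: {marker.exists()})")
    print(f"running inside a virtualenv: {in_virtualenv()}")
```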

For the RAG processing, we're going to use VERBA : https://github.com/weaviate/Verba

It is an app provided by WEAVIATE (the people who make the VectorStore itself, so why not?!)

Welcome to Verba: The Golden RAGtriever, an open-source application designed to offer an end-to-end, streamlined, and user-friendly interface for Retrieval-Augmented Generation (RAG) out of the box. In just a few easy steps, explore your datasets and extract insights with ease, either locally with HuggingFace and Ollama or through LLM providers such as OpenAI, Cohere, and Google.

We're only interested in using the local offline Ollama LLM models & it handles that just fine.

To install, as I'm a native kinda guy, I just install it raw; however, if you want a bit of protection, you can use a Docker container instead, which is probably better if you already have Docker installed & are using the machine for personal stuff, etc. etc.

Then it's just a case of doing the following:

$ pip3 install goldenverba

I did have a couple of hiccups with some library versions needing to be specific, but they were easy to fix: just uninstall the ones that were there and install the specific versions that were needed. You might not need to do this, but I'm recording it here just in case:

$ pip3 uninstall tiktoken

$ pip3 install tiktoken==0.6.0

$ pip3 uninstall openai

$ pip3 install openai==0.27.9
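To check whether the pins actually took (or whether you need them at all), here's a small sketch using the standard library's `importlib.metadata`; it only reads installed package metadata and changes nothing. The `EXPECTED` dict just mirrors the versions above:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional, Tuple

# Pins from the workaround above; adjust if your install needs different ones.
EXPECTED = {"tiktoken": "0.6.0", "openai": "0.27.9"}


def installed_version(package: str) -> Optional[str]:
    """Installed version string, or None if the package isn't installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_pins(expected: Dict[str, str]) -> Dict[str, Tuple[Optional[str], bool]]:
    """Map each package name to (installed version, whether it matches the pin)."""
    result = {}
    for name, pin in expected.items():
        found = installed_version(name)
        result[name] = (found, found == pin)
    return result


if __name__ == "__main__":
    for name, (found, ok) in check_pins(EXPECTED).items():
        status = "OK" if ok else "MISMATCH"
        print(f"{name}: installed={found} expected={EXPECTED[name]} {status}")
```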

Once Python has done its thing, you just need to set a couple of environment variables to tell the tool to use Ollama.

I opted for the tinyllama model, but you can use any of the ones you've downloaded. You might also want to make those settings permanent (e.g. in your shell profile) so they don't need setting again after a reboot.
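The variable names depend on the Verba release; the Verba README has documented `OLLAMA_URL` and `OLLAMA_MODEL`, but treat those names as an assumption and check the README for your version. Here's a small sanity-check sketch that reads them, falling back to Ollama's default local address, `http://localhost:11434`:

```python
import os
from collections.abc import Mapping
from typing import Optional, Tuple
from urllib.parse import urlparse

# ASSUMED variable names: check the Verba README for your version.
URL_VAR = "OLLAMA_URL"
MODEL_VAR = "OLLAMA_MODEL"
DEFAULT_URL = "http://localhost:11434"  # Ollama's default local address


def ollama_settings(env: Optional[Mapping] = None) -> Tuple[str, Optional[str]]:
    """Return (url, model) from the environment; the URL is defaulted and sanity-checked."""
    env = os.environ if env is None else env
    url = env.get(URL_VAR, DEFAULT_URL).rstrip("/")
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"{URL_VAR} doesn't look like a URL: {url!r}")
    return url, env.get(MODEL_VAR)  # model is None when unset


if __name__ == "__main__":
    url, model = ollama_settings()
    print(f"Ollama at {url}, model: {model or '(not set!)'}")
```

Run it in the same shell you'll start Verba from (after e.g. `export OLLAMA_MODEL=tinyllama`), since environment variables set in one terminal aren't visible in another.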

Now, all you need to do is run the web app from the CLI:

$ verba start

You'll notice some permission-related errors/warnings. I didn't investigate further, as they didn't seem to make much difference; just be aware of them in case they cause a problem later.

Now, open a web-browser to http://localhost:8000

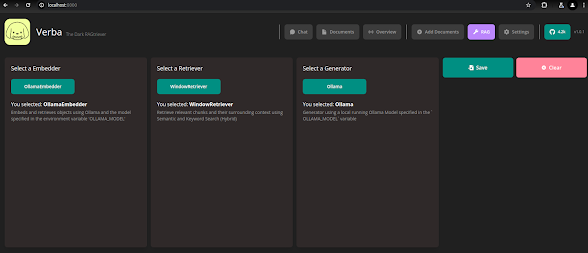

First things first, go check the Overview settings to make sure that the Ollama environment variables were picked up okay:

Now, go check and change the RAG settings values:

As you can see, the interface is nice & clean. This UI is NOT really meant for end users, but it's a really good way for an admin person to import documents and make them available for RAG. In a minute we'll look at how to use Node-Red as a potential user front-end that calls the APIs.

I'd already added a couple of documents; if you click the Documents tab you'll see them:

Adding documents confused my simple brain to start with; you go to the Add Documents tab:

Press the [Add Files] button (bottom-left) and make sure you set the Chunk size to something reasonable (I think the chunk size is limited to 1000). Select the embedder model (this could be useful to change later for multi-lingual processing, but we'll get back to that), and when you press [IMPORT] you'll see progress output underneath that section.

You'll also see output in the CLI window showing the ingestion progress bars too.
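To get a feel for what the Chunk size setting does, here's a minimal word-based chunker with overlap. It splits on whitespace as a stand-in for real tokens (an assumption; Verba's own chunker and tokeniser will count differently), and the `overlap` parameter is illustrative, although overlapping chunks are a common way to avoid cutting an idea in half at a chunk boundary:

```python
from typing import List


def chunk_words(text: str, size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into chunks of up to `size` words, each sharing `overlap` words with the previous one.

    Whitespace-separated words stand in for tokens here (an assumption).
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks
```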

To quiz the ingested documents you then click the Chat tab:

As you can see above, the documents are listed in relevance order (there's a value for this that you'll see in the JSON response in the next screenshot). You can view just the context, i.e. the relevant part of the document, or click to show the whole document, which is really nice: it still keeps the green box around the relevant context section, which is a great visual learning tool.
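If you want that relevance ordering outside the UI (say, in a script or in Node-Red), it's just a sort on the score. A sketch only: `documents`, `doc_name` and `score` are hypothetical field names, so substitute whatever the JSON in the Inspect view actually uses:

```python
from typing import List, Tuple


def rank_documents(response: dict, min_score: float = 0.0) -> List[Tuple[str, float]]:
    """Return (document name, score) pairs, best first, dropping anything below min_score.

    'documents', 'doc_name' and 'score' are HYPOTHETICAL field names.
    """
    ranked = []
    for doc in response.get("documents", []):
        score = float(doc.get("score", 0.0))
        if score >= min_score:
            ranked.append((doc.get("doc_name", "<unnamed>"), score))
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked
```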

You can then click through the documents in the middle to see their context values. You'll see the CPU usage ramp up for a bit, and then the "AI Response" generated from those context snippets appears on the right-hand side. This is the Ollama LLM model's answer; as you can see, it returned quite a substantial response, even though I was using tinyllama.

In the web-browser's Inspect mode, you can see what the API calls are doing behind the scenes, i.e. what is being POSTed to /api/query and what response is returned:

This gives us a sneak peek at how we can now use Node-Red.

Before that, a quick look in the GitHub repo shows us where the APIs live:

Dig in a little further and you'll see that the "AI response" part is actually done over WebSockets, so it isn't really an API call, as the response is streamed back to the UI. However, if you think about it logically, we don't need that step: we can call the Ollama LLM directly.
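Calling Ollama directly also gives you streaming if you want it: `/api/generate` streams by default, returning newline-delimited JSON where each object carries a `response` fragment and the last one has `done` set to true. A minimal sketch that stitches the fragments back together from an iterable of lines (`collect_stream` is a hypothetical helper name):

```python
import json
from typing import Iterable, Union


def collect_stream(lines: Iterable[Union[bytes, str]]) -> str:
    """Join the 'response' fragments of an Ollama NDJSON stream, stopping at done=true."""
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between JSON objects
        obj = json.loads(line)
        if "error" in obj:
            raise RuntimeError(obj["error"])  # Ollama reports failures as {"error": "..."}
        parts.append(obj.get("response", ""))
        if obj.get("done"):
            break  # final object: generation finished
    return "".join(parts)
```

Iterating the object returned by `urllib.request.urlopen(...)` yields the body line by line, so it can be passed straight to `collect_stream`.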

I'm not going to explain Node-Red here, and will assume you've already got it set up and ready to go. If not, why not? Go do it. Do it now.

Here's a quick Flow that can be split into 2 parts. The top part sets up the call to the /api/query REST API and extracts the resulting output, as shown here:

[set query]

[call api]

[convert payload to object]

[extract results]

Nothing too complex or tricky about any of that.
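For anyone not running Node-Red, the same four nodes map onto a few lines of standard-library Python. This is a sketch under assumptions: Verba listening on `localhost:8000`, a POST body of `{"query": ...}`, and a `context` field in the reply. Check all three against what the browser's Inspect view shows for your version:

```python
import json
import urllib.request

VERBA_QUERY_URL = "http://localhost:8000/api/query"


def set_query(question: str) -> bytes:
    """[set query] Build the POST body (ASSUMED shape: {"query": ...})."""
    return json.dumps({"query": question}).encode("utf-8")


def call_api(body: bytes, url: str = VERBA_QUERY_URL, timeout_s: float = 60.0) -> str:
    """[call api] POST the body and return the raw response text."""
    req = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")


def to_object(raw: str) -> dict:
    """[convert payload to object] Parse the JSON text."""
    return json.loads(raw)


def extract_results(payload: dict) -> str:
    """[extract results] Pull out the context text (ASSUMED field name)."""
    return payload.get("context", "")
```

With Verba running, `extract_results(to_object(call_api(set_query("What is Verba?"))))` chains the four steps just like the wires in the flow.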

Now for the fun part. We now have the document information, if we want it, and we also have the context text.

[call ollama directly]

At the top of this node, I've included a snippet showing how you can call the Ollama LLM Engine's REST API from the CLI. Try it, it works.
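The same call from Python, as a sketch: a POST to `/api/generate` on Ollama's default port 11434, with `stream` set to false so a single JSON object comes back with the generated text in its `response` field. `tinyllama` is just the model I used; swap in any model you've pulled:

```python
import json
import urllib.request


def ollama_generate_request(prompt: str, model: str = "tinyllama",
                            base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build (but don't send) a non-streaming request to Ollama's /api/generate."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, model: str = "tinyllama") -> str:
    """Send the request and return the generated text from the 'response' field."""
    with urllib.request.urlopen(ollama_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```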

Next in the node, I pasted a snippet from the Verba source code showing how they tweak the prompt in Python.

Underneath that, I set the msg.payload object with the values we need to pass to the Ollama API. I'll mention "request_timeout" here, as I don't believe it can actually be set. Why is it there? Well, before I reworked the "prompt" output, passing the prompt the way I originally was meant Ollama actually hit the timeout limit (which I believe is 5 minutes?) and then threw an error, so I was attempting a workaround. In reality, this is a bit silly: if the prompt takes more than 5 minutes, something needs to change, e.g. better hardware, use of GPUs, etc. Anyway, I left it in just 'cos.
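As far as I can tell, `request_timeout` isn't a field that Ollama's `/api/generate` looks at; the limit that bites is the client's own timeout (in Node-Red that's the http request node, which I believe can be overridden per message via `msg.requestTimeout`, in milliseconds). A sketch of the same idea in Python, where the timeout is an argument to the HTTP call rather than part of the JSON body; `post_json` is a hypothetical helper name:

```python
import json
import socket
import urllib.error
import urllib.request


def post_json(url: str, body: dict, timeout_s: float = 300.0) -> dict:
    """POST a JSON body and parse the JSON reply, raising TimeoutError after timeout_s.

    The timeout lives here, on the client; it is not a field in the request body.
    """
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout_s) as resp:
            return json.loads(resp.read())
    except urllib.error.URLError as exc:
        # A timeout while connecting/sending arrives wrapped in URLError
        if isinstance(exc.reason, socket.timeout):
            raise TimeoutError(f"{url} did not answer within {timeout_s}s") from exc
        raise
    except socket.timeout as exc:
        # A timeout while waiting for the reply is raised directly
        raise TimeoutError(f"{url} did not answer within {timeout_s}s") from exc
```

For example, `post_json("http://localhost:11434/api/generate", {"model": "tinyllama", "prompt": "Hi", "stream": False}, timeout_s=120)` gives up after two minutes instead of hanging.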

The "prompt" I left in actually works really well, with a much shorter response time and a semi-decent response too, as we'll see in the debug output in a minute.

Now, this is the output from the first node [payload to object]. As you can see, the values are returned under the payload as an object; however, the payload.response value is a STRING, even though it actually contains JSON, so we drop in another node that specifically converts that string to an object, like so:

That then gives us a better object to process:

We now have ALL of the information from before, plus a response, so we can do anything we want with it.
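In Python terms, that extra string-to-object node is just a second `json.loads` on the one field that arrived as text. A sketch, assuming the field is `response` as in the debug output; it leaves the value as a string if it isn't valid JSON, since an LLM's plain-text answer usually won't be:

```python
import json
from typing import Any


def unwrap_json_field(payload: dict, field: str = "response") -> dict:
    """Return a copy of payload where payload[field], if it holds a JSON string, is parsed."""
    value = payload.get(field)
    if not isinstance(value, str):
        return dict(payload)  # already an object (or missing): nothing to do
    try:
        parsed: Any = json.loads(value)
    except json.JSONDecodeError:
        return dict(payload)  # plain text, not JSON: leave it as a string
    return {**payload, field: parsed}
```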

For the eagle-eyed, you'll notice that the QUERY in the debug output above doesn't actually match the query I originally put into the first node. Why did it change? Well, that's the bit I'm now going to investigate further.

It's never finished is it :-D

The good news, though, is that I'm writing this up so I can share it with others (and myself), so let's see where it goes.

UPDATE:

Okay, whilst it was a nice exercise, it sucked performance-wise. Ingesting documents just took too long; the results might be "better", but they came at too much of a cost.

The other thing was the oddity with the WebSockets. I attempted to replicate the code, extracting the steps and dropping them into Node-Red; however, the responses I then got from the LLM never matched the Verba tool's responses, no matter what I tried.

That was a blocker right there, so I'm going to park this for now & come back to it at a later date.
