Raspberry Pi 5 & offline LLM (Ollama.ai)

Well, it's been about a year since I was writing some Python code (yes, I did type that and I admit it; to be fair, it worked okay... as cardboard code). I was using Llama.cpp and LangChain, and I wrote RAG and CoT (chain-of-thought) code. I was quantizing my own model on the laptop I was using, so I was fully exploiting its 64 CPUs, 16GB GPU and 128GB RAM, and I was pushing the boundaries of that spec with streaming Q&A: ingesting my own documents, storing them in a Chroma vector store and then questioning the content. I actually overloaded and crashed the Windows 10 OS on the laptop, and it needed a fresh rebuild afterwards... it never quite worked the same.

Anyway, my point is that I was chuffed I could run a Llama LLM offline on a laptop and that it worked reasonably well. I was using code I'd written and it was doing okay. I attempted to explain it to other people and it turns out it was too complicated...

Then a very early version of privateGPT appeared, so I repurposed that code, modified and tweaked it, and then took the direction of using the GPT4All model, which seemed to work well... Then I wrote a Python Gradio UI that worked very well. However, it was still a single-user experience, and I wasn't going to take the Python code further to handle the document-upload stuff. It was good enough and simple enough for other people to understand, and then, basically, I got bored and handed the code over to other people to work with...

I then decided to sit back on the fence with regard to LLMs... grabbed some popcorn and focused on other things. Turns out that was a good idea. Trying to keep up with the latest changes, which were happening on a daily basis, was starting to distract me far too much, so I decided it was simpler to step back, keep an eye on things and wait for the dust to settle.

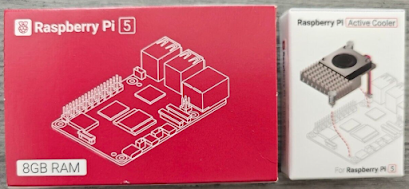

Then I got a Raspberry Pi 5 8GB device in Nov 2023... I installed Ubuntu and did some other work with the device. Now it's the end of Jan 2024 and that work has been wrapped up, so I thought I'd take a look at how things have moved on. I wondered whether I could take last year's code, or a variant of it, and run it on the Raspberry Pi 5...

Along came Ollama. Wow, that has made life so much simpler and easier!

Here are some screenshots, mostly as a reminder for me, that show Ollama being set up and used on the RPi 5, as well as a chat UI, the ability to use LangChain, and being able to use JavaScript too...
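For reference, here is a minimal sketch of talking to Ollama from code rather than from the chat UI. It assumes Ollama is running on the Pi with its REST API on the default port 11434 and that a model named `llama2` has already been pulled (the model name is an assumption; substitute whatever you pulled). It uses only the Python standard library and streams the answer as Ollama sends it back, one JSON object per line.

```python
import json
import urllib.request
from typing import Iterable, Iterator, List

# Assumption: Ollama is serving locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint with streaming on."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def iter_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from newline-delimited JSON chunks, stopping at done=true."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        chunk = json.loads(raw)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break


def ask(prompt: str, model: str = "llama2") -> str:
    """Send a prompt, print the answer as it streams in, and return the full text."""
    parts: List[str] = []
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        # Iterating the HTTP response yields one line (one JSON object) at a time.
        for token in iter_tokens(resp):
            print(token, end="", flush=True)
            parts.append(token)
    print()
    return "".join(parts)
```

With Ollama running, `ask("Why is the sky blue?")` prints the reply token by token. Keeping the line parsing in `iter_tokens` separate from the network call means it can be checked without a model loaded.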

I'm going to take this a bit further now, as these examples showed that the responses were FAST. You may think 2-5 tokens a second is NOT fast, but trust me: on the original laptop it was taking me hundreds of seconds to get a response that now takes 1-2 seconds.
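One way to put a number on "fast" is to read the timing fields Ollama returns. Its API documentation describes the final (`done: true`) chunk of a `/api/generate` reply as carrying `eval_count` (tokens generated) and `eval_duration` (nanoseconds spent generating them), with tokens per second being `eval_count / eval_duration * 10^9`. The sketch below assumes those field names; the `GenerationStats` class and helper functions are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class GenerationStats:
    """Timing fields from the final (done=true) chunk of an Ollama reply."""
    eval_count: int       # tokens generated in the response
    eval_duration: int    # time spent generating them, in nanoseconds
    total_duration: int   # whole request (model load, prompt eval, generation), in ns


def from_final_chunk(chunk: dict) -> GenerationStats:
    """Pick the timing fields out of the parsed final JSON chunk."""
    return GenerationStats(
        eval_count=chunk["eval_count"],
        eval_duration=chunk["eval_duration"],
        total_duration=chunk["total_duration"],
    )


def tokens_per_second(stats: GenerationStats) -> float:
    """Generation speed: tokens divided by generation time converted to seconds."""
    if stats.eval_duration <= 0:
        raise ValueError("eval_duration must be positive")
    return stats.eval_count / stats.eval_duration * 1e9


def wall_clock_seconds(stats: GenerationStats) -> float:
    """End-to-end time the user actually waited, converted from nanoseconds."""
    return stats.total_duration / 1e9
```

As a purely illustrative example (not a measurement), a reply of 10 tokens with an `eval_duration` of 4,000,000,000 ns works out to 2.5 tokens a second. Reporting `wall_clock_seconds` alongside it helps, since the wait a user notices includes model loading and prompt processing, not just generation.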

Ah, talking of the original privateGPT: this is how I can use it as a skeleton to morph my previous code to use Ollama and LangChain, via JavaScript this time. That said, looking at the Python code above, it looks super simple.
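The privateGPT-style skeleton boils down to: embed document chunks, store the vectors, find the chunks closest to a question, and paste them into the prompt sent to the model. Below is a minimal sketch of that retrieval step in plain Python (the author's own plan is JavaScript via LangChain). `TinyVectorStore` is a hypothetical in-memory stand-in for something like Chroma, and the `embed` function is passed in; in practice it could call an Ollama embeddings endpoint, but that wiring is an assumption and is left out so the sketch runs offline.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class TinyVectorStore:
    """Hypothetical in-memory vector store, standing in for e.g. Chroma."""

    def __init__(self, embed: Callable[[str], Vector]) -> None:
        self.embed = embed
        self.items: List[Tuple[str, Vector]] = []

    def add(self, chunks: Sequence[str]) -> None:
        """Embed each text chunk once and keep it alongside its vector."""
        for text in chunks:
            self.items.append((text, self.embed(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        """Return the k stored chunks most similar to the query."""
        q = self.embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: Sequence[str]) -> str:
    """Assemble a retrieval-augmented prompt from the retrieved chunks."""
    joined = "\n---\n".join(context)
    return (
        "Use only the context below to answer the question.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )
```

The resulting prompt string is what would then be sent to the model. Keeping `embed` as a parameter means the same store works whether the vectors come from Ollama, LangChain or a toy function used for testing.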

This might be about the right time to step back into the world of LLMs running on edge devices... The RPi 5 is a good start, then the Orin, and then I reckon it'll be time to run on Android and iOS platforms. Who needs all that "Cloud" bullsh*t? No, that is so 2014...

Here's the future... it looks a lot like 2001 :-D
