super simple UI for your GPT

okay, so I wanted a super simple UI as a front-end for a localGPT / privateGPT / offline GPT model.

I didn't want to write a Python Flask app, as I'm really not keen on that.

I didn't want to write a Node.js app, as that was just way too much effort.

I didn't want to learn the latest (this week's fave) JavaScript framework.

I didn't want to mess around with ReactJS / <insert other names here>.

I just wanted a SIMPLE UI tool.

I had a couple of suggestions.

I actually wanted to use Node-RED uibuilder (and probably will do in the future).

I was erring towards begrudgingly using Python in some form or other, as the existing code for interacting with the localGPT was already Python (basically a ChromaDB populated with content, using a GPT4All model as the baseline for the embeddings; if none of that made sense, you'd best stop reading now).

........

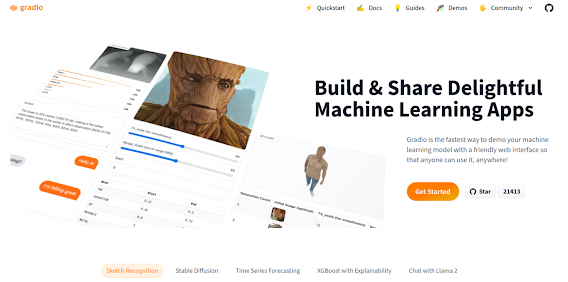

I then stumbled over this gem of a super simple UI tool: Gradio.app

....how simple? Just a pip install, some super simple code and voilà, a UI.
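To give a feel for "some super simple code", here is a minimal sketch (not the exact code I used) of a one-box-in, one-box-out Gradio UI. The `answer` function is a hypothetical placeholder for the real localGPT lookup, and the labels and title are made up for illustration.

```python
# Minimal Gradio front-end sketch; `answer` is a hypothetical placeholder
# for the real localGPT / ChromaDB lookup.


def answer(question: str) -> str:
    # Swap this body for the real retrieval + LLM call.
    return f"You asked: {question}"


def build_ui():
    # Imported here so `answer` can be exercised without Gradio installed.
    import gradio as gr  # pip install gradio

    return gr.Interface(
        fn=answer,
        inputs=gr.Textbox(label="Question"),
        outputs=gr.Textbox(label="Answer"),
        title="localGPT",
    )
```

Calling `build_ui().launch()` from a script run on the CLI serves the page locally, by default at http://127.0.0.1:7860.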

Yes, it may not be performant for 100,000 concurrent users (it might be; I've not checked!), but I don't need it for that. I need it for a couple of test users, so it'll do.

I set up the demo/sample UI in a few minutes.

A little bit of fiddling and tweaking, using the OOTB demo import of state_of_the_union.txt, plus a little bit of fiddling with some Python code, and then it's just a case of running it from the CLI.

We load the GPT4All LLM and then use it to answer the question from the content in the local ChromaDB... and there's the UI. I asked the question, pressed the [SUBMIT] button and it's now "thinking".
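A rough sketch of that load-and-query step, assuming a 2023-era LangChain release (0.0.x) with the `gpt4all` and `chromadb` packages installed; newer LangChain versions move these classes into `langchain_community`. The choice of `GPT4AllEmbeddings` is an assumption and must match whatever embeddings populated the ChromaDB, and the model path and persist directory are hypothetical arguments.

```python
# Sketch only: assumes LangChain 0.0.x import paths plus gpt4all and chromadb.
from typing import Any, Callable


def build_qa_chain(model_path: str, persist_dir: str, k: int = 4):
    # Heavy imports live here so `ask` can be used and tested on its own.
    from langchain.chains import RetrievalQA
    from langchain.embeddings import GPT4AllEmbeddings
    from langchain.llms import GPT4All
    from langchain.vectorstores import Chroma

    # Re-open the already-populated ChromaDB (e.g. from state_of_the_union.txt).
    # The embedding function must match the one used when it was populated.
    db = Chroma(persist_directory=persist_dir, embedding_function=GPT4AllEmbeddings())
    llm = GPT4All(model=model_path, verbose=False)
    return RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",  # stuff the k retrieved chunks into one prompt
        retriever=db.as_retriever(search_kwargs={"k": k}),
        return_source_documents=True,  # keep the chunks for display
    )


def ask(qa: Callable[[dict], dict], question: str) -> dict[str, Any]:
    # Chains of this LangChain era are callable with a {"query": ...} dict and
    # return a dict holding "result" and, here, "source_documents".
    return qa({"query": question})
```

The `k=4` default mirrors the four retrieved results shown in the output.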

Note the timer in the top right of the output window; that's a neat touch. It consistently shows that, with this setup, this query takes 69-72 seconds. However, once the query has finished the timer is removed, so I have code that prints the time taken to stdout.
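One way to print the time taken to stdout is a small decorator around the function the UI calls. This is a minimal sketch rather than the code used here; `answer_question` is a hypothetical stand-in for the slow retrieval + LLM call.

```python
import time
from functools import wraps


def timed(fn):
    """Print how long each call to fn takes, since the UI timer vanishes when done."""

    @wraps(fn)  # keep the wrapped function's name for the log line
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Runs even if fn raises, so failed queries are timed too.
            elapsed = time.perf_counter() - start
            print(f"{fn.__name__} took {elapsed:.1f}s")

    return wrapper


@timed
def answer_question(question: str) -> str:
    # Hypothetical stand-in for the real (slow) retrieval + LLM call.
    return f"echo: {question}"
```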

And here is the output. I've got the ANSWER at the top, but I've also shown the 4 results, basically the whole JSON object output. Now, I can faff about and make that look prettier and potentially make it do all sorts, but the key thing here is that I did NOT spend weeks making a UI to do this. I spent the time on the backend/plumbing, making the GPT work properly; the UI is secondary, so I didn't want to spend long on it: the quicker, the better.
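A sketch of putting the ANSWER on top with the retrieved chunks dumped as JSON underneath. It assumes a RetrievalQA-style result dict (a `result` string plus `source_documents`, each exposing `page_content` and a `metadata` dict); the "ANSWER:"/"SOURCES" layout is illustrative.

```python
import json
from typing import Any


def format_result(result: dict[str, Any]) -> str:
    """Render a RetrievalQA-style result: answer first, then the retrieved chunks."""
    # Each LangChain Document carries `page_content` and a `metadata` dict.
    sources = [
        {"content": doc.page_content, "metadata": doc.metadata}
        for doc in result.get("source_documents", [])
    ]
    return (
        f"ANSWER:\n{result['result']}\n\n"
        f"SOURCES ({len(sources)}):\n{json.dumps(sources, indent=2)}"
    )
```

If you'd rather show the raw object, Gradio also has a `gr.JSON` output component.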

I'm pretty F***ing impressed with that as a UI; it literally took me about 10 minutes to go from nothing to that interface.

sweet.

yes, it IS basic; that was the point. If I now want to carry on and do more advanced things (including going beyond the LangChain RetrievalQA API call), I can... and probably will... next week.

well, there you go....

after spending 5 mins skimming through the online docs, I found a specific section about Chatbot UIs and using a local GPT. Naturally, I found this AFTER I wrote the code above, but it's good to know there are some specific extras that can be done.
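For a chat-style front-end, recent Gradio releases include `gr.ChatInterface` (check your installed version; it is not in older 3.x releases), which wraps a function taking the latest message and the chat history. A minimal sketch, assuming `ask` is any hypothetical question-to-answer function such as the single-box handler:

```python
from typing import Callable


def make_respond(ask: Callable[[str], str]):
    """Adapt a question -> answer function to ChatInterface's (message, history) signature."""

    def respond(message: str, history: list) -> str:
        # history holds earlier turns; a one-shot retrieval QA call ignores it here.
        return ask(message)

    return respond


def build_chat_ui(ask: Callable[[str], str]):
    # Imported here so make_respond can be used without Gradio installed.
    import gradio as gr

    return gr.ChatInterface(fn=make_respond(ask), title="localGPT chat")
```

As with the single-box version, `build_chat_ui(some_answer_fn).launch()` serves it locally.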

...

...and no, I don't know what the [FLAG] button is about. I need to work out how to make that go away.

UPDATE: okay, literally 1 min after writing the above sentence, a quick search revealed THIS PAGE, which explains the usage of the [FLAG] button... actually, I might leave it in for now.
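If you do want the [FLAG] button gone, `gr.Interface` takes a flagging option; as far as I know it is `allow_flagging` in Gradio 3.x/4.x and was renamed `flagging_mode` in 5.x, so treat the version split below as an assumption to check against your install. `flag_kwargs` is a hypothetical helper, not part of Gradio.

```python
def flag_kwargs(gradio_major_version: int, show_flag: bool) -> dict:
    """Keyword args for gr.Interface that show ("manual") or hide ("never") [FLAG]."""
    mode = "manual" if show_flag else "never"
    if gradio_major_version >= 5:
        return {"flagging_mode": mode}  # assumed Gradio 5.x name
    return {"allow_flagging": mode}  # Gradio 3.x / 4.x name
```

Usage would be something like `gr.Interface(fn=answer, inputs="text", outputs="text", **flag_kwargs(int(gr.__version__.split(".")[0]), show_flag=False))`.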

...okay, for safe-keeping here's the generic code:
