3D Printed head and some further OpenCV and a bit of Servos

I spent a day or two playing around with a variety of things that you can do with OpenCV in relation to images / videos and recognition / tracking. Interesting stuff. I also got distracted, as I foolishly decided to run a Linux update on my PiCade and thereby bricked it. I burnt many, many hours trying to revive it, but alas it was dead beef. I then opted for a fresh installation, which had its own quirks to overcome, mainly getting the volume recognised and set (100% is not ideal!). Then I had to collate all of the files I had scattered across a variety of locations on my 4TB NAS. Needless to say, now that it's all set up & functioning as it should, I've backed up the SD card and stored the image on the NAS; if anything ever happens again, I can just restore the 64GB in an hour and job done.

I did the un-thinkable and used Python code to test out some options - yes, it was most definitely 'cardboard code', it was to test how things should work first and then I ported it over to C/C++, after I'd taken a metaphorical coding decontamination shower.

As you can see here, I was playing with getting outlines via livesketch:

Then I was playing with the concept that once you'd detected a face, you zoom in on it - I believe it was keeping track as I moved my head around. What was slightly bizarre was that the left eye was flipping the image horizontally when it zoomed in, most confusing:

I modified the code to ignore the background and only work on the foreground, which was interesting to work with visually.

That gave rise to pondering if I could "mix" one eye doing one thing and the other doing another, but both doing the face detection, yep, sure could:

But..... still no real difference with that CPU usage:

However, the OOTB code for using the HAAR detection was still sky-rocketing the CPU usage.

I removed the HAAR face detection code and I could see a huge drop in CPU usage:

and if I added it back in, back up it went...

So I thought I'd go dig a little deeper as to what it was actually doing that was causing the huge demand on the CPU.

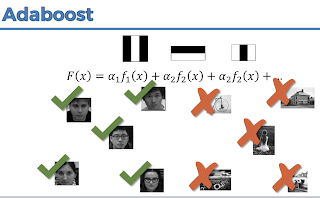

Here's a couple of screenshots from the Udemy courses I referenced last time. The takeaway here is that there are X amount of "features" that have been determined to make up a face, and these are as crude as looking for blocks of pixels that adhere to those "features", for instance eyebrows, eyes, nose, mouth etc. As you cannot "look" at the whole picture in one go, you have to split the image up into sections - that's the picture I like below with the boats in. The green dots give you an idea of this in practice: imagine you move to each of the green dots, "scan" within that region looking for "feature" matches, then move on to the next one and so forth.

Ah ha! So, that makes a lot more sense as to why this is so CPU intensive: I've been asking the poor little Raspberry Pi 4 to perform that level of intense feature scanning on EVERY frame transmitted from the webcam! When you look at it like that, it is actually rather impressive that the Raspberry Pi 4 can achieve this task without burning itself out after 2 minutes!
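To put a rough number on that, here's a bit of my own 'cardboard code'. It is only a sketch of the idea, not what OpenCV actually does internally: the function names, the 24 pixel window, the 1.1 scale factor and the 2 pixel step are all my assumptions for illustration. It builds an integral image (so the sum of any block of pixels costs four lookups), evaluates one crude "light block vs dark block" feature, and counts how many window positions a scan would visit in a single frame.

```python
import numpy as np


def integral_image(gray):
    """Summed-area table with an extra zero row and column at the top/left."""
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the w x h block at (x, y), using four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def two_rect_feature(ii, x, y, w, h):
    """Crude edge feature: top half of the block minus the bottom half."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


def count_windows(frame_w, frame_h, win=24, scale=1.1, step=2):
    """Count the window positions visited over all window sizes.

    Hypothetical parameters: the window grows by `scale` each pass and
    slides `step` pixels at a time. Real detectors differ in the details.
    """
    total = 0
    size = float(win)
    while size <= min(frame_w, frame_h):
        s = int(size)
        nx = (frame_w - s) // step + 1  # positions across
        ny = (frame_h - s) // step + 1  # positions down
        total += nx * ny
        size *= scale
    return total


if __name__ == "__main__":
    print("windows per 640x480 frame:", count_windows(640, 480))
```

Under those assumptions a single 640x480 frame works out to well over a hundred thousand window positions, each of which has to be checked against a pile of features, and that is before you multiply by the webcam frame rate.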

As I've said before, nothing I'm doing here is anywhere near any form of rocket science. If you Google/Duck Duck Go/Bing or YouTube a search, you'll get tons of hits varying from 3-minute videos to 50-minute videos, to Udemy courses (that just lift/repeat what the OpenCV docs show you, but if you've not read them you wouldn't realise). In fact, one of my Critter devices I built a few years back was using an ultrasonic sensor, a PIR sensor and a pan/tilt sensor with a Pi Cam to drive itself around. I just wasn't doing any OpenCV image processing, as it was running on a PiZero that was/is very limited in power.

My questions were indeed answered and I now fully realise that there is a LOT going on, or rather a LOT that I'm asking the poor little Raspberry Pi 4 to process in real time. I do have a few options if continuing with HAAR. One is to stagger the frame capture, for instance only process every 10th frame. Another is to keep the previous frame and do a compare: if nothing has changed, don't do any processing. Alternatively, use a different method / approach to performing face detection / recognition.
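The first two options can be sketched in a few lines of Python. This is just my rough take, not tested on the Pi: `frame_changed`, `process_stream`, the threshold of 5.0 and the `detect` callback are all made-up names and values for illustration, and `detect` stands in for whatever expensive detection call you are using. Frames are assumed to be NumPy arrays, which is how OpenCV hands them over in Python.

```python
import numpy as np


def frame_changed(prev, curr, threshold=5.0):
    """True if the mean absolute pixel difference exceeds the threshold."""
    if prev is None or prev.shape != curr.shape:
        return True
    # Widen to a signed type so the subtraction cannot wrap around.
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    return float(diff.mean()) > threshold


def process_stream(frames, detect, every_n=10, threshold=5.0):
    """Run `detect` on every Nth frame, and only if the picture has changed.

    Frames in between simply reuse the most recent detection result.
    """
    last_processed = None
    last_result = None
    results = []
    for index, frame in enumerate(frames):
        if index % every_n == 0 and frame_changed(last_processed, frame, threshold):
            last_result = detect(frame)  # the expensive call
            last_processed = frame
        results.append(last_result)
    return results
```

Note the compare is against the last frame that was actually processed rather than the one immediately before, so a slow drift still triggers a fresh detection eventually.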

This guy's web page covers object tracking (note, it was from July 2018; had I stayed focused on the 3D printed head and what I wanted to do back then, rather than focusing all my efforts on work/work, I would have been doing this stuff 18 months ago, but hey-ho, we all go at our own speed....and I was also building my Kustom Car at the time too, so I have an excuse!):

https://www.pyimagesearch.com/2018/07/30/opencv-object-tracking/

As he mentions, there are 8, yes, EIGHT separate object tracking implementations within OpenCV itself. I won't repeat the article, but the KFC (yes, I know I typed it wrong, I'm hungry!), sorry, KCF tracker might be the one that I need to have a look at for what I want to achieve.

"Use KCF when you need faster FPS throughput but can handle slightly slower object tracking accuracy"

or maybe I'll need to use CSRT:

"Use CSRT when you need higher object tracking accuracy and can tolerate slower FPS throughput"


If I step back and ponder what my original requirement was - it was for the 3D printed head to "detect" that a person was within its field of view, to greet that person (if we could identify who it was) and to then keep track of the movement of the person, moving the 3D printed head via the servos.

That last part can easily be achieved with normal object tracking. For instance, I have a 1999/2000 ERS-111 AIBO that has its "pink ball" that it does the same thing with: you "show" it the ball, it detects it is pink and therefore its ball, and then you can roll the ball around and it tracks it and "plays" with it. We can achieve that same thing now very easily; in fact here's a great little video of someone showing you how to do just that (except they use a "red ball", but it's the exact same concept, and they also use servos to move the webcam around to keep the "red ball" in the vision center):
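The "keep it in the vision center" part boils down to a small feedback loop: measure how far the object is from the middle of the frame and nudge the pan servo by a fraction of that error. Here's a minimal sketch of one step of that loop. The function names, the gain, the deadband and the -90 to 90 degree range are all my assumptions, and the sign of the correction depends on which way round your servo is mounted.

```python
def clamp(value, low, high):
    """Keep value inside the range low..high."""
    return max(low, min(high, value))


def pan_step(target_x, frame_width, current_angle,
             gain=0.05, deadband=20, min_angle=-90, max_angle=90):
    """Return a new pan angle that nudges the target toward the frame center.

    target_x is the x pixel of the tracked object's center. Errors inside
    the deadband are ignored so the servo does not jitter.
    """
    error = target_x - frame_width / 2
    if abs(error) <= deadband:
        return current_angle
    # Assumed mounting: a positive angle turns the camera left, so an
    # object to the right of center needs a smaller angle.
    return clamp(current_angle - gain * error, min_angle, max_angle)
```

Calling `pan_step` once per processed frame and feeding the result to the servo gives a simple proportional controller; the same thing with `target_y` and the frame height would handle tilt.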

Hmmmmm..... lots of food for thought on how to do the "boring" stuff. I'll no doubt swing back around to this little challenge and figure something out. Now, onto the "fun" stuff...

Servos

As you can imagine, I do actually have rather a lot of servos of all shapes and sizes that I have accumulated over the years. The most financially painful was the purchase of all the HobbyKing servos for the complete InMoov robot; yeah, I got carried away (>50 servos) and they are NOT the small ones. I think my logic at the time was, if I purchased them, it would force me to use them and therefore make the whole robot. Little did I appreciate the trials and tribulations of getting the 3D printer to play ball and my tolerance level for bed levelling....

I did a bit of looking around and I found that quite a few people were using an Arduino to handle the servo interface work, here is one such INSTRUCTABLE.

As I'm using a Raspberry Pi, I thought I'd purchase a Servo interface board specifically for it.

The board is the MonkMakes servo board and it's super simple to get up and running, here's the PDF.

Here's the Python library that is used to test the servo(s). AngularServo.

As I mentioned before, having a nice double-table workspace is good & bad....

Here is the code after a tiny tweak and we now have synchronised servos:

As you can see, simple enough to get up and running. The next step will be to morph this into the C++ code with the facial recognition or even just face/object detection and then add the tracking capability and then tweak the code to handle the servos in the 3D printed head.

As I glanced over, I noticed one of the old Critter robots (slightly worse for wear and missing some parts now!) and I see that I actually have a PCA9685 servo controller board attached to it:

Now, that is interesting, I wonder if I can control MORE than 6 servos? The 3D printed head has 2 big servos inside it to move left/right and to open/close the jaw and there are another couple of servos in the face/eyes to move the eyes left/right and up/down... Maybe, I'll just stick with controlling the 4 using the MonkMakes board for now....but that PCA9685 does look like it has the capability to control 16 servos! If that were the case, I might opt for passing off the servo control to an Arduino..... anyway, as usual, I'm distracting myself.
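For what it's worth, the PCA9685 is a 16-channel PWM chip with 12-bit resolution, so each channel's pulse is set as a count out of 4096 "ticks" per PWM period. A quick sketch of the arithmetic for turning a servo angle into a tick count is below. The function name is mine, and the 50Hz frequency and 1.0ms to 2.0ms pulse range are assumptions that are typical for hobby servos; real servos vary, so check yours before trusting the end stops.

```python
def angle_to_ticks(angle, freq_hz=50, min_pulse_ms=1.0, max_pulse_ms=2.0):
    """Convert a 0-180 degree angle to a 12-bit PCA9685 'on' duration.

    Assumes a linear mapping from angle to pulse width, which is only
    approximately true for real servos.
    """
    if not 0 <= angle <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    pulse_ms = min_pulse_ms + (angle / 180) * (max_pulse_ms - min_pulse_ms)
    period_ms = 1000 / freq_hz  # 20ms at 50Hz
    return round(pulse_ms / period_ms * 4096)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, "degrees ->", angle_to_ticks(angle), "ticks")
```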

.....

btw - for the entire time of doing all of the above, I've been driving myself nuts looking for a WiFi extender thingy. I originally had it plugged into an Amcrest webcam down the end of my garden, then I moved it back into my home/office when I was using a customer laptop that was WiFi crippled (so network cable connection only), and it was plugged into the wall socket just over there for months.... It's no longer in the wall socket and for the life of me I cannot find it. I've turned the place upside down... it is here, in this house, somewhere! Why do I "need" it?.... cough cough.... well, I "found" my old Handbook 486 with WiFi card and it all still boots to DOS and works fine, and I just need the WiFi extender to be able to plug into the "old" WiFi router (as it's not got new fancy WPA2)...anyway, it was meant to be just a bit of fun to see if a project from 6 years ago was still operational, but it seems to be niggling at my brain... the device is somewhere around here...
